Reference documentation

NV-NIM:

NV-NIM

Introduction

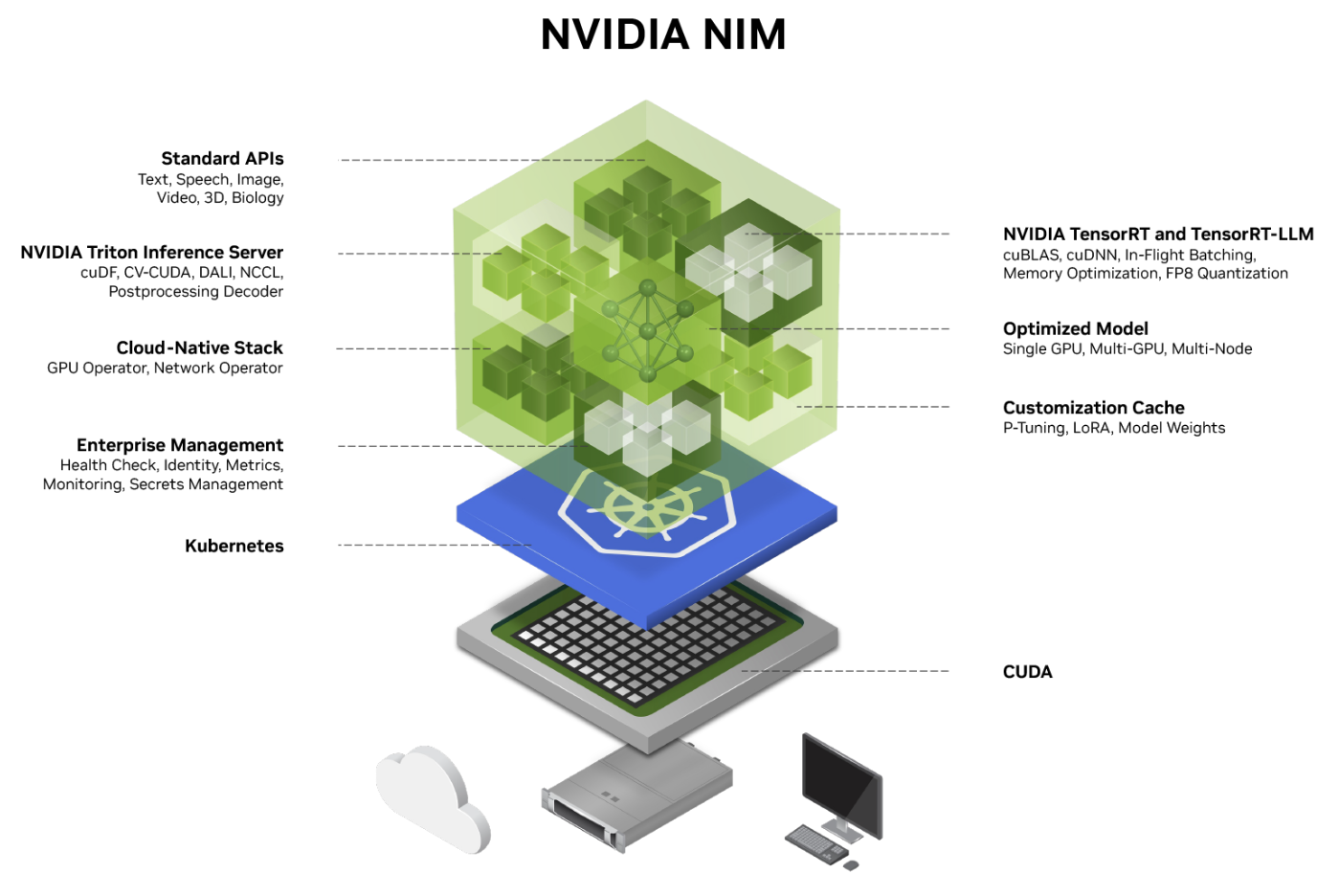

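The NV-NIM ecosystem is centered on NVIDIA. If you want maximum throughput and most of your hardware is NVIDIA, NIM is a reasonable choice.

Supported inference engines include: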

- TensorRT

- TensorRT-LLM

- PyTorch

- vLLM

- SGLang

- and other inference engines

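NIM suits Kubernetes-based cloud-native deployments as well as individual users: a service can be started with a single command and then used to build agents and other applications.

NIM can deploy not only large language models but also other kinds of AI models.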

Drawbacks

Production use requires a commercial license, namely NVIDIA AI Enterprise.

License terms:

Members of the NVIDIA Developer program have free access to NIM API endpoints for prototyping, and to downloadable NIM microservices for research, application development, and experimentation on up to 16 GPUs on any infrastructure—cloud, data center, or personal workstation. Access to NIM microservices is available through the duration of program membership.

Downloadable NIM access is free for research, development and testing via the NVIDIA Developer program. Once you are ready to move to production, you’ll need an NVIDIA AI Enterprise license that can be acquired via any of our partners. The pricing model for NVIDIA AI Enterprise begins at $4500 per GPU per year. You can register and apply for an NVIDIA AI Enterprise License here.

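In other words, free use is limited to at most 16 GPUs and to research, development, and testing, so it cannot back a production service. The license starts at $4,500 per GPU per year.

That is fairly expensive, but NVIDIA does provide the "black magic" that accelerates models, so charging a service fee is reasonable. Whether it is worth it depends on the use case.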
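To make the pricing concrete, here is a minimal sketch of the arithmetic. The two constants come from the license text quoted above. The function names are hypothetical, and because the quoted price is a "begins at" figure, the result is only a lower-bound estimate, not an actual quote from a partner.

```python
# Figures taken from the quoted license text; everything else is illustrative.
FREE_GPU_LIMIT = 16            # max GPUs under the free Developer program terms
LIST_PRICE_PER_GPU_YEAR = 4500 # USD, "begins at" price per GPU per year


def within_free_dev_limit(num_gpus):
    """True if a non-production setup fits the free 16-GPU allowance."""
    return 0 <= num_gpus <= FREE_GPU_LIMIT


def annual_license_cost(num_gpus, price_per_gpu=LIST_PRICE_PER_GPU_YEAR):
    """Lower-bound yearly license cost in USD for a production deployment."""
    if num_gpus < 0:
        raise ValueError("num_gpus must be non-negative")
    return num_gpus * price_per_gpu


# Example: an 8-GPU production node costs at least 8 * 4500 USD per year.
print(annual_license_cost(8))
```

Under these assumptions a single 8-GPU server costs at least $36,000 per year in licensing, which is the kind of number to weigh against the expected throughput gain.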

Usage

Use NIM to deploy an LLM and run inference.
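As a rough illustration of the inference side, the sketch below builds a chat request against a NIM LLM service through its OpenAI-compatible HTTP API. It assumes a NIM container is already running and reachable at `http://localhost:8000`. The address and the model name are placeholders that do not come from these notes, and `build_chat_request` and `chat` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: a NIM LLM container is already running and listening here.
BASE_URL = "http://localhost:8000"
# Assumption: placeholder model name; use one that your NIM actually serves.
MODEL = "meta/llama3-8b-instruct"


def build_chat_request(prompt, model=MODEL, base_url=BASE_URL, max_tokens=64):
    """Build (but do not send) a POST request for /v1/chat/completions."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


# Usage, once the service is up:
# print(chat("Say hello in one sentence."))
```

Keeping request construction separate from sending makes it easy to inspect the payload without a running service. Any OpenAI-compatible client should work the same way if pointed at the same base URL.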

Goal

Supported model catalog: